FUN, MODELS & PEDANTRY

The Funderstanding Series

Squid Game Theory

"Nash Equilibria in Korean Death Games"

October 2021 | By Daniel Dugas

Are you into sadistic versions of children's games? Or are you simply very desperate for money? In this post, we will look at basic game theory concepts and how they apply to the currently immensely popular series "Squid Game". Hold on to your tracksuits!

Spoiler alert! The whole post is one big spoiler. Do not read any further if you plan to watch the show.

Without game theory, sure, you'll have fun. And you'll lose.

But learn it? Now you can over-analyze everything (and lose anyways).

"Game Theory? You mean, like, the study of Call of Duty?"

Game theory is a fascinating topic, but the name can be misleading. It's actually not about games in the sense of "things we do for fun". It's about games in the sense of "decision making in the presence of other rational agents". Despite the name, Game Theory is a very serious topic. It describes how countries might go to war even though it's worse for everyone involved, how smart people might compete with each other when co-operation is clearly better, or how economies can end up letting people starve even when there's enough food for everyone.

And, lucky for us, it also readily applies to the theatrical, sadistic contest that the characters in Squid Game find themselves in.

We'll tackle the games one by one, in order. Are you ready? Get set...

You want to know true fear? If this doll catches you scrolling, it publishes your entire internet history on Twitter.

First Game: Red light, Green light

The first game makes it clear that the rules have serious consequences. It can also be considered the most tragic game, simply because of the sheer number of unsuspecting players who end up murdered.

Yet there is very little 'game' here in the 'game theory' sense. One tough decision is how fast you should try to move, to maximize the odds of making it before the timer runs out. Maybe running on all fours leads to a more stable waiting position? You probably don't have time to find out. Follow the rules and hope for the best: even if you do everything more or less right, there seems to be some luck involved in the movement detection.

But game theory is about how our decisions and those of others interact, and this game is mostly a big free-for-all.

One of the most dramatic moments is player 199's action to save the main character, at enormous risk to himself. It is so selfless that it is almost irrational (which makes it all the more a heroic and human act; possibly the most heroic in the entire show, given how much danger it places on him for a slight chance of saving someone!). But what does it mean to be irrational, or rational, in game theory?

In simple, non-repeated games, a rational agent is one that always: 1) knows what they want, and 2) picks the choice which gives them the best outcome.

We'll see how these two properties lead to the basic theories of decision-making later. For now, we have a choice to make...

Sure we have a lot of churn, but the customers which make it all the way past our funnel give us fantastic reviews.

Interlude: Going Home, and Utility Functions

So you somehow survived the first game. Congratulations! Even more incredibly, after an extremely tight vote, the game organisers let you leave. Just like that, they sent you on your merry way. A little sleeping gas, some hand-tying, and here you are: safe and home. Except... it seems someone has changed their mind. You could stay here, or you could go back to the game. What should you do?

At that point, one could argue: if your aim is to save yourself and others, the best thing to do is not to return. The organisers have very little incentive to actually give you anything if you do (somehow) win: they can dispose of you at almost no cost to themselves (what's one more murder to an organisation which just massacred hundreds?). They have no public image to uphold, since they are operating in complete illegality. And any surviving players are also a liability. But $40M is a lot of money. And some don't have much to live for, anyway. So going back could be the chance of a lifetime?

What does game theory have to say about this dilemma? Well, what is clear is that you have at least two options: going or not going. Less obviously, the game organisers also have several options: try to kill every participant to tie up loose ends (even if they return, and win), or stay true to their word. In game theory, these possibilities are typically represented with the normal-form matrix: each side of the matrix represents a player, and lists the decisions they can take. For each decision pair, the players get a pair of outcomes: the left is your outcome, the right is the other player's outcome. Let's write out this game's normal-form matrix:

|  | murder every player | uphold pledge |
|---|---|---|
| flee | probably live + poor / no show + keep money | live + poor / no show + keep money |
| participate | die + poor / hit show + keep money | probably die + maybe rich / hit show + lose money |

Each row is one of your possible decisions: Flee or Participate. Each column is one of the other player's decisions: Murder every player or Uphold pledge. Each decision pair leads to an outcome pair. For example, the decision pair {You: Flee | Them: Murder every player} leads to the outcome pair {You: probably live + poor | Them: no show + keep money}. For brevity, we write decision pairs using the first letter of each player's decision: {You: Flee | Them: Murder every player} becomes FM.

This payoff structure is barely a game: there is almost no interdependence. The organisation's choice will not be affected by what they think players will do, only by whether they care more about money or about keeping their word.

On the other side, as a rational player, does the tiny chance of winning outweigh the price of death?

A utility function is a player's answer to that question. It's a function that ranks states of the world by the agent's preference. A normal person might rank:

live + poor > probably live + poor > probably die + maybe rich > die + poor *

* Of course, the ranking will depend on the individual payoff probabilities. It's the rational agent's (you) job to figure those out, then rank outcomes from favorite to least favorite. Here, we just assume those probabilities are fixed (say, 1:456 chance of winning, 455:456 chance of dying) and rank accordingly.

Let's use the short notation instead:

FU > FM > PU > PM

whereas someone who cares much less about their life, and much more about money, would have a utility function which ranks:

PU > FU > FM > PM

On the other side, a reasonable utility function for a criminal organisation could be:

PM > FU = FM > PU

But, just maybe, the organisation could really be in it for the ideals, in which case:

PU > FU > PM > FM

In game theory, it's typical to represent the value of each outcome with arbitrary numbers (1, 2, 3, 4). The exact values don't matter, only that 4 is better than 3, which is better than 2, and so on. Thanks to these utility functions, we can now simplify our normal-form matrix:

|  | murder every player | uphold pledge |
|---|---|---|
| flee | 3, 2 | 4, 2 |
| participate | 1, 3 | 2, 1 |

normal player, normal org

|  | murder every player | uphold pledge |
|---|---|---|
| flee | 3, 1 | 4, 3 |
| participate | 1, 2 | 2, 4 |

normal player, idealistic org
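The numbers in these two matrices are just the rankings rewritten as ordinal payoffs. Here is a minimal Python sketch of that conversion, assuming a ranking is written as a list of tiers from best to worst, where outcomes in the same tier are tied (like FU = FM for the criminal organisation). The function name `ordinal_payoffs` is illustrative, not a standard API.

```python
def ordinal_payoffs(tiers):
    """Map a best-to-worst list of tiers to ordinal payoffs.

    Each tier is a list of outcomes the agent is indifferent between.
    The worst tier gets 1, the next one up gets 2, and so on.
    """
    payoffs = {}
    for score, tier in enumerate(reversed(tiers), start=1):
        for outcome in tier:
            payoffs[outcome] = score
    return payoffs


# Normal person: FU > FM > PU > PM
normal_player = ordinal_payoffs([["FU"], ["FM"], ["PU"], ["PM"]])
# Criminal organisation: PM > FU = FM > PU
criminal_org = ordinal_payoffs([["PM"], ["FU", "FM"], ["PU"]])

print(normal_player)  # {'PM': 1, 'PU': 2, 'FM': 3, 'FU': 4}
print(criminal_org)   # {'PU': 1, 'FU': 2, 'FM': 2, 'PM': 3}
```

Tied outcomes share a score, so the criminal organisation's favourite outcome scores 3 rather than 4, which matches the org's numbers in the first matrix.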

The first thing we notice is that the normal person would rationally always flee. It doesn't matter what choice the organisation makes, or even what its utility function is: the normal person always gets a better payoff by fleeing. This is called a Dominant strategy. When one player has a dominant strategy and the other doesn't, the first one leads while the other is forced to follow. It's also possible for both players to have a dominant strategy, and that's the case here (for the normal org, murder is only weakly dominant: if you flee, it gets 2 either way). In both matrices, neither player's choice depends on what the other does.
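These dominance checks can be made mechanical. A minimal sketch, assuming each game is stored as a Python dict from (your move, org move) to (your payoff, org payoff), with F/P for flee/participate and M/U for murder/uphold; the helper `dominates` is hypothetical, written just for this post.

```python
# Matrices copied from above. Keys: (your move, org move); values: (your payoff, org payoff).
# F = flee, P = participate, M = murder every player, U = uphold pledge.
NORMAL_ORG = {
    ("F", "M"): (3, 2), ("F", "U"): (4, 2),
    ("P", "M"): (1, 3), ("P", "U"): (2, 1),
}
IDEALISTIC_ORG = {
    ("F", "M"): (3, 1), ("F", "U"): (4, 3),
    ("P", "M"): (1, 2), ("P", "U"): (2, 4),
}
YOUR_MOVES, ORG_MOVES = ("F", "P"), ("M", "U")


def dominates(game, player, a, b, other_moves, strict=True):
    """Does move `a` dominate move `b` for `player` (0 = you, 1 = the org)?

    Strict: `a` pays more against every move of the other player.
    Weak: `a` never pays less, and pays more against at least one move.
    """
    def payoff(move, other):
        key = (move, other) if player == 0 else (other, move)
        return game[key][player]

    diffs = [payoff(a, o) - payoff(b, o) for o in other_moves]
    if strict:
        return all(d > 0 for d in diffs)
    return all(d >= 0 for d in diffs) and any(d > 0 for d in diffs)


for name, game in (("normal org", NORMAL_ORG), ("idealistic org", IDEALISTIC_ORG)):
    print(name)
    print("  flee strictly dominates for you:", dominates(game, 0, "F", "P", ORG_MOVES))
    print("  murder strictly dominates for org:", dominates(game, 1, "M", "U", YOUR_MOVES))
    print("  murder weakly dominates for org:",
          dominates(game, 1, "M", "U", YOUR_MOVES, strict=False))
    print("  uphold strictly dominates for org:", dominates(game, 1, "U", "M", YOUR_MOVES))
```

Fleeing strictly dominates for you in both matrices, and upholding the pledge strictly dominates for the idealistic org. For the normal org, murder only wins the weak test, because when you flee the org scores 2 either way.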

But what if the player is greedy and suicidal?

|  | murder every player | uphold pledge |
|---|---|---|
| flee | **2, 2** | 3, 2 |
| participate | 1, 3 | 4, 1 |

greedy player, normal org

|  | murder every player | uphold pledge |
|---|---|---|
| flee | 2, 1 | 3, 3 |
| participate | 1, 2 | 4, 4 |

greedy player, idealistic org

What if the greedy player believes the organisation is normal (the first of the two matrices above)? Here we have an example of one-sided dominance: it's the org which will always pick murder, no matter what the player chooses, since its payoffs in the 'murder every player' column are never lower than in the 'uphold pledge' column. As a result, the greedy player has to follow: they know that the org will choose murder! So, they must decide to flee. This (FM) is the Nash equilibrium. It's the point where no player gets a better outcome for themselves by changing their decision alone. I've marked it in bold in the payoff matrix. Notice that the Nash equilibrium does not lead to maximum overall payoffs (2+2 < 4+1). This is an important lesson in game theory: Nash equilibria prevail, even when a different outcome could be better overall (a higher sum of the players' payoffs), or even better for everyone (every player gets a higher payoff).

Now, what if the greedy player believes the organisation is idealistic (the second matrix)? Here, once again, the org's decision is not influenced by the player: Uphold pledge dominates Murder every player. Knowing this, the greedy player will always decide to play. The result is a stable Nash equilibrium, PU. Luckily, this Nash equilibrium is also the optimal outcome! Unlike the previous games, this is an example of a cooperative game, where both players will have no trouble agreeing and getting what they want. Also note that all the games we've seen so far are asymmetric: each player has a different role, and the payoff matrix is not symmetric.

Enough theory; back to our decision. You must figure out your utility function, and how likely you think you are to win and survive. Do you value your life more than money? Flee. Do you think the org cares about keeping its word? If yes, play. Otherwise, flee.

If you do decide to play, you must play to survive: given the attrition in the first game, it is clear that very few will remain after 5 more such games; possibly the organisers plan on having a single winner, or even none. If any games are competitive (you don't know yet), any non-Machiavellian kindness will reduce your odds of survival and increase someone else's (poor player 199), in the grand scheme of things.

And yet you can decide to cooperate anyway; after all, you are human. In theory, it can still be rational to cooperate, depending on your utility function (your core values): how much do you value your life? The lives of others? The prize money?
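Finding pure-strategy Nash equilibria in a small matrix comes down to checking every cell for a profitable unilateral switch. A minimal Python sketch, using the two greedy-player matrices above (same F/P and M/U shorthand); `pure_nash` is a hypothetical helper, and it treats a tie as "no reason to switch", which is the usual convention.

```python
from itertools import product

# The two greedy-player matrices above. Keys: (your move, org move);
# values: (your payoff, org payoff). F/P = flee/participate, M/U = murder/uphold.
GREEDY_VS_NORMAL = {
    ("F", "M"): (2, 2), ("F", "U"): (3, 2),
    ("P", "M"): (1, 3), ("P", "U"): (4, 1),
}
GREEDY_VS_IDEALISTIC = {
    ("F", "M"): (2, 1), ("F", "U"): (3, 3),
    ("P", "M"): (1, 2), ("P", "U"): (4, 4),
}
YOUR_MOVES, ORG_MOVES = ("F", "P"), ("M", "U")


def pure_nash(game):
    """Cells where neither player gains by switching their own move alone."""
    equilibria = []
    for r, c in product(YOUR_MOVES, ORG_MOVES):
        row_ok = all(game[(r, c)][0] >= game[(r2, c)][0] for r2 in YOUR_MOVES)
        col_ok = all(game[(r, c)][1] >= game[(r, c2)][1] for c2 in ORG_MOVES)
        if row_ok and col_ok:
            equilibria.append((r, c))
    return equilibria


print(pure_nash(GREEDY_VS_NORMAL))      # [('F', 'M')]
print(pure_nash(GREEDY_VS_IDEALISTIC))  # [('P', 'U')]

# Total payoff per cell: the equilibrium FM (2 + 2) is not the best overall.
print({cell: sum(pay) for cell, pay in GREEDY_VS_NORMAL.items()})
```

The last line shows FM totalling 4 while PU totals 5, the gap between "stable" and "best overall" described above.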

Make sure that you have a clear answer for these questions before you proceed, otherwise your decision making will be inconsistent at best.

In hindsight, maybe I shouldn't have picked the Mandelbrot fractal shape

Second Game: Honeycomb, a Tragedy of the Commons

Once again, it looks like luck will play a big part in deciding your fate. The best way to increase your odds, this time, is to have good knowledge of previous-generation Korean traditions. As luck would have it, that's your speciality (also, a potential ally told you that sugar was being prepared in the kitchen).

Having figured out the next game, like player 218, you find yourself having to choose: do you share the information with your teammates, or do you betray them? A tough decision!

Or... is it?

Game theory says: let's look at the payoffs. If the player shares, he increases his teammates' chances of survival. If he betrays them, his teammates are more likely to die.

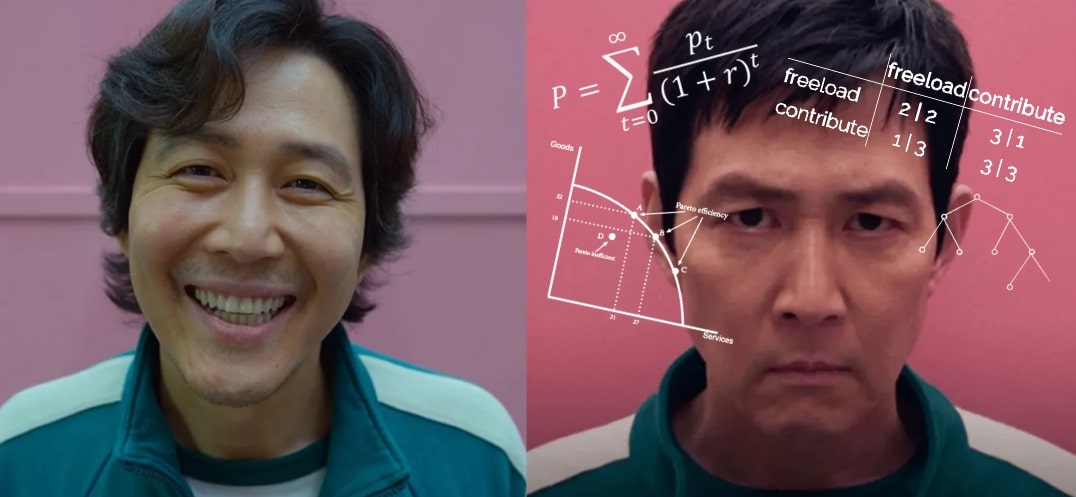

Which is better? One factor here is whether the contest will stay 'cooperative' or become 'competitive'. In the first case, there's no reason not to help your teammates, so let's assume that things will turn competitive (as 218 seems to think). Now the utility takes a familiar form: fewer people is better for me, more is worse. However, I am not the only person making this choice: other people can also choose to contribute to 'thinning the herd' (betray, kill, etc.) or freeload (watch their own back, and let others do the thinning). Did someone say, normal-form?

|  | freeload | contribute |
|---|---|---|
| freeload | 2, 2 | 3, 1 |
| contribute | 1, 3 | 3, 3 |

I rank outcomes in that order because a single player's impact on the actual size of the surviving cohort is small: for #218, 3 players more or less depending on whether he betrays, out of 100 players. Not exactly a strong competitive advantage. It's also not much of an advantage because it would benefit everyone who makes it to the next game equally, not just the player himself, and we've assumed the game is competitive.

This situation is actually quite familiar: it's an example of a classic Tragedy of the commons social dilemma, which happens all the time in real life. It's a symmetric game with a non-cooperative Nash equilibrium (FF). Another name for this game is the Commonize Costs–Privatize Profits game. Doing the social thing yourself (here, 'thinning the herd') is good for everyone, but not doing it is almost as good for you. Since you expect others might freeload, as a rational player you have to, too.

In #218's case, helping his friends could earn him trust and valuable allies, which makes freeloading (sharing the information) even more attractive. This tips the payoffs and makes sharing the information the dominant strategy! Interestingly, the character shown to be the most traditionally educated, the most rational, and the most sociopathic doesn't follow his dominant strategy. (In game theory, this can be evidence that the player's utility is not what we think. Could he be optimizing for maximum drama?)

In the real world, this is a typical problem that happens at all scales of human organisation, from small tribes to international treaties (e.g. global warming). One way that societies overcome these social dilemmas is through pre-commitment: giving up agency to create credible threats of punishment for freeloaders, which changes the payoff matrix. Police, governments, and the United Nations are all examples of attempts to do this. But we often underestimate how hard these strategies are to enforce [2].

Of course, you might be thinking that reputation or morals are other reasons for cooperation in such a case, and you'd be right. Morals can be thought of as terms in your utility function which alter payoffs (like a self-punishment for being 'bad'), and they are a powerful aspect of real decision making (however, when betraying your friend in a death game is the 'social good' option in the payoff matrix... we're probably a little past morals). As for reputation, I won't get into repeated games here, but if you're interested, it's a fascinating topic (see [1]).

Think that's a bad night? I once had a nightmare that my friends found my reddit account.

Interlude: the Night is Dark

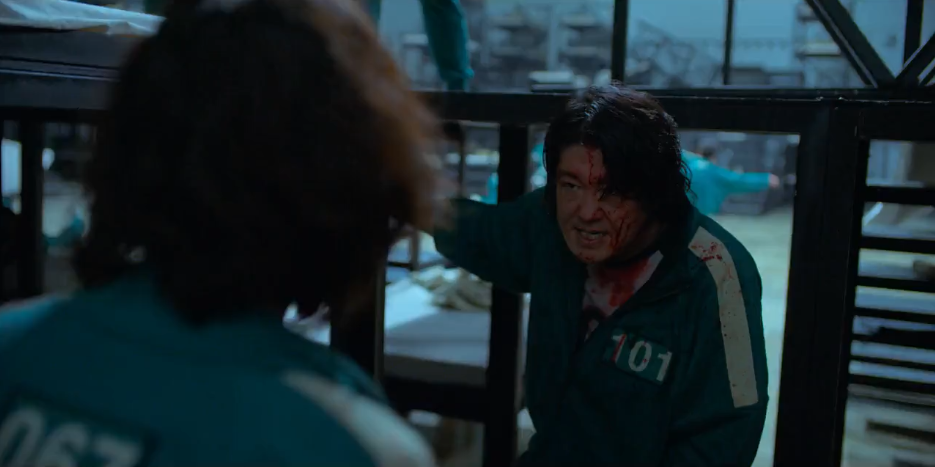

After the second game, we see a general shift towards a competitive mentality among the players. As the first night approaches, our protagonists realize that people are going to attack each other in the dark to reduce the number of competitors.

Putting aside the tragedy-of-the-commons aspect of this situation, which we already discussed, there's another interesting dynamic at play.

There is safety in numbers: forming teams allows players to better defend themselves. However, this also implies trusting your teammates, making you vulnerable to betrayal by them (and them vulnerable to your own betrayal).

A reasonable utility function for a nice person is:

PP (harmony) > BB (both betray) > BP (I betray them) > PB (they betray me).

And for a psychopath:

BP (I betray them) > PP (harmony) > BB (both betray) > PB (they betray me).

First, what if both teammates are psychopaths?

|  | protect | betray |
|---|---|---|
| protect | 3, 3 | 1, 4 |
| betray | 4, 1 | 2, 2 |

Uh oh, things are about to get nasty.

Straight away, we see how bad this is: betraying is the dominant strategy for both psychopaths. Whatever the other does, betraying pays more (4 > 3 if the other protects, 2 > 1 if the other betrays), so the only Nash equilibrium is BB, even though both would prefer PP. This paints a grim picture of our two psychopaths' second night in the dormitories: each one expects the other to strike, and neither will hold back.

"Hey!" you object. "I don't care about that situation, I'm not a psychopath!" Indeed, how rude of me! Here's the normal-form game between you (nice person) and a psychopath:

|  | protect | betray |
|---|---|---|
| protect | 4, 3 | 1, 4 |
| betray | 2, 1 | 3, 2 |
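One mechanical way to read this matrix is iterated elimination of strictly dominated strategies: delete any move that is beaten by another move against everything the opponent might do, then repeat on what is left. A minimal Python sketch, with P/B for protect/betray and you (the nice person) as the row player; `iterated_elimination` is a hypothetical helper written for this post.

```python
# "Nice person (row) vs psychopath (column)", copied from the matrix above.
# Keys: (your move, their move); values: (your payoff, their payoff).
NICE_VS_PSYCHO = {
    ("P", "P"): (4, 3), ("P", "B"): (1, 4),
    ("B", "P"): (2, 1), ("B", "B"): (3, 2),
}


def iterated_elimination(game, rows, cols):
    """Repeatedly delete strictly dominated moves until none are left to delete."""
    rows, cols = list(rows), list(cols)
    changed = True
    while changed:
        changed = False
        # A row move is dropped if another row move pays the row player more
        # against every column move that is still in play.
        for a in list(rows):
            if any(all(game[(b, c)][0] > game[(a, c)][0] for c in cols)
                   for b in rows if b != a):
                rows.remove(a)
                changed = True
        # Same test for the column player, against the remaining row moves.
        for a in list(cols):
            if any(all(game[(r, b)][1] > game[(r, a)][1] for r in rows)
                   for b in cols if b != a):
                cols.remove(a)
                changed = True
    return rows, cols


print(iterated_elimination(NICE_VS_PSYCHO, ("P", "B"), ("P", "B")))  # (['B'], ['B'])
```

The psychopath's "protect" goes first (betraying pays them 4 > 3 and 2 > 1); once it is gone, "betray" beats "protect" for you as well (3 > 1), leaving BB.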

Oops: the psycho would dominate that game, and you'd both end up in BB. Not a pleasant night. Let's try putting you on a team with a nice person instead.

|  | protect | betray |
|---|---|---|
| protect | 4, 4 | 1, 2 |
| betray | 2, 1 | 3, 3 |

There's a Nash equilibrium at PP, Nice! But wait: there's also one at BB... Is that bad?

This game is in the class of coordination games: both of you would rather do the same thing than not. The good news is, if you're already at PP, neither of you is going to move away from it unilaterally, so you can breathe. But if you're both starting in a sub-optimal situation (BB), it will be hard to get to PP, because you need both players to move simultaneously (otherwise, they both lose out). In game theory, PP is said to be Pareto-optimal: no other outcome in the matrix makes one player better off without making the other worse off.
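Pareto optimality is easy to check by brute force: an outcome is Pareto-optimal when no other outcome is at least as good for both players and strictly better for one. A minimal Python sketch for the nice-vs-nice matrix above (P/B for protect/betray); `pareto_optimal` and `pareto_improves` are hypothetical helpers.

```python
# "Nice person vs nice person", copied from the matrix above.
# Keys: (your move, their move); values: (your payoff, their payoff).
NICE_VS_NICE = {
    ("P", "P"): (4, 4), ("P", "B"): (1, 2),
    ("B", "P"): (2, 1), ("B", "B"): (3, 3),
}


def pareto_improves(x, y):
    """True if payoff pair `x` is at least as good as `y` for both, and better for one."""
    return all(a >= b for a, b in zip(x, y)) and any(a > b for a, b in zip(x, y))


def pareto_optimal(game):
    """Outcomes that no other outcome Pareto-improves on."""
    return [cell for cell, pay in game.items()
            if not any(pareto_improves(other, pay) for other in game.values())]


print(pareto_optimal(NICE_VS_NICE))  # [('P', 'P')]
```

BB is still a Nash equilibrium, but it fails this test because PP gives both players more (4 > 3).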

In practice, those games feel like a careful simultaneous dance towards an optimum. For example, overthrowing an unpopular dictator is also in this class of game: if you can get everyone to commit, the rebellion will be successful. However, if you fail to coordinate, and only some people go out into the street, the rebellion will fail and the price they pay will be steep.

She's thinking about Nash's seminal work on bargaining theory

Third Game: Tug of War

The only decision in this game is who to team up with. However, without information on the next game, it is hardly a decision problem; it's more a test of your ability to guess ahead, and of your physical value as a player.

Looking at competitive tug-of-war videos, you can see that the technique described by player 001 is indeed proper (and could maybe turn the tide against a heavier, stronger team?). However, the three-steps-forward gambit doesn't look like it would actually work: competitive players sometimes end up lying almost completely flat on the floor, and still apply close to maximum force. Another piece of bad news for you: unlike dramatic Netflix shows, real life doesn't care that you're the main character.

Basically, while it looks like a skill / strength test, this game is mostly luck-based: if you build the wrong team or are unlucky enough to draw a stronger team, you're toast.

There is one possible winning move here for the main characters: once the game is announced, and the precarity of their position as a weaker team becomes clear, it would be a good time to try for a go-home vote. Given that half the players will be in weaker teams, enough people might be convinced to vote out of this game and go home. Stronger players might try to argue against but would be less convincing given their clear motives.

I'll let you model that one, I hear the next game is about to start.

I think this guy may be starting to lose his marbles

Fourth Game: Marbles

This game is deceptively simple: the only rule is that you must convince your opponent that you should be the one to live (giving you all their marbles). If neither of you can convince the other, you both die.

The games played are all distractions from that essence, because the core game is unsolvable. The game is symmetric, and there can be only one winner.

|  | keep | give |
|---|---|---|
| keep | 2, 2 | 3, 1 |
| give | 1, 3 | n/a |

In game theory, this is a Bargaining game. This particular variant is a cruel decision problem. Even though someone's death is guaranteed, rational agents would not be able to reach a compromise, since surrendering (1) is worse than stalling (2). What makes it worse is that the 'both refuse to yield' outcome has a slight allure: 'sure, it's unlikely, but what if they don't kill us when the clock runs out?'

This is where human instincts kick in to solve the unsolvable problem. By pre-committing to respecting the outcome of an unrelated, 'fair' game, the players can turn the stuck decision problem into a seemingly unbiased skill / luck game that they have a good chance of winning.

Notice that we do not see the soldiers actually enforcing the internal rules of the made-up game. When the hero loses his last marble (according to the agreed-upon rules), he does not get shot. Only when people willingly surrender their last marble do they get eliminated.

If people realize this, they can choose to defect from the agreed-upon game (since there is no way to effectively pre-commit). This happens with the gang member, who switches internal games when he realizes he will lose the agreed-upon one.

What I find amazing here is how natural the behaviors in the show seem to us. A rational machine would never surrender the last marble, as it is a guaranteed loss (at that point, reputation, ego, everything is irrelevant, as it is the last thing you'd ever do). Instead, we humans appeal to fairness, create pretend games, and stick to our commitments even when we theoretically don't have to. Is this the result of years of evolution, giving us biases and a common language / rules to allow us to solve stuck decision problems? After all, many real problems are like this, where two parties have to decide who gets the biggest slice of the pie, and violence is punished externally.

In fact, it almost feels like we're built to solve situations like these (maybe because tribes which can solve bargaining problems can maximize total utility, and so become dominant over tribes that can't). As a result, most teams are able to get a winner. This would certainly not be the case with rational agents.

(Note that another way out - shown in the episode - is to associate a positive outcome to the other person living on: if you like the other enough, you could rationally decide to self-sacrifice and still increase your utility function.)

There are not many tips here: a ruthless player should seek to abuse the tendency of people to stick to their commitments, by trying to win the agreed-upon game by luck / skill, and if things don't go their way: revert to the 'stuck' scenario (refusing to play further), change the game, convince or even trick the other. Note that this is considered a 'dick move' in human culture, and history has many cases of people choosing death over dishonor. Note: I'm not advocating either! This is a good opportunity to ask yourself what your values are.

Unlucky for me, I've been practicing a totally different game of "Bridge"

Fifth Game: Bridge

At first glance, this would seem to be another straightforward luck-based game (unless you can somehow predict what the game will be). The first player has odds of 1:2^16 of surviving, the second roughly 1:2^15*, and so on. Basically, only the last few players have any chance to make it.

* More precisely, the second player's probability of surviving depends on how far the first player gets: 0.5 * 2^-15 + 0.5 * (0.5 * 2^-14 + 0.5 * (...)).

However, there's a hitch: the clock is ticking, and you can't be sure that the people in front of you will risk jumping onto the next pane. In fact, why would they? Well, they can wait (guaranteed death), or they can move (unlikely but possible survival). The choice which maximizes expected payoff is clear. Unless...

What if there's a third option? So far, we've treated this as a single-player decision, but there are other players, and they are motivated to survive too. We've assumed that the other players can only wait, but what if they're allowed to overtake? Let's add this option to the decision matrix.

|  | stall | overtake |
|---|---|---|
| stall | 1, 1 | 3, 2 |
| move ahead | 2, 3 | n/a |
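Under simplifying assumptions, the exact odds for every player have a closed form. A minimal Python sketch, assuming 16 unknown pane pairs (matching the 2^16 figure above), an independent 50/50 guess at each new step, players who remember every pane revealed in front of them, and no timer. Each step costs one fall with probability 1/2, so the total number of falls follows a Binomial(16, 1/2) distribution, and player k survives exactly when fewer than k falls happen. `survival_probability` is a hypothetical helper.

```python
from fractions import Fraction
from math import comb

STEPS = 16    # assumption: 16 unknown pane pairs, matching the 2^16 figure above
PLAYERS = 16  # assumption: 16 players crossing in a fixed order


def survival_probability(k, steps=STEPS):
    """Chance that the k-th player (1-indexed) crosses safely.

    Every step is a fresh 50/50 guess for whoever reaches it first. A wrong
    guess costs one player and reveals the safe pane for everyone behind, so
    the total number of falls is Binomial(steps, 1/2), and player k survives
    exactly when fewer than k falls happen.
    """
    favourable = sum(comb(steps, falls) for falls in range(min(k, steps + 1)))
    return Fraction(favourable, 2 ** steps)


for k in (1, 2, 3, PLAYERS):
    p = survival_probability(k)
    print(k, p, float(p))
```

In this model the second player's chance comes out to 17/65536, about 1 in 3855, rather than 1 in 2^15 = 32768: the first player's falls help the second player a lot.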

|---|---|---|

| stall | 1 | 1 | 3 | 2 |

| move ahead | 2 | 3 |

wait... we've seen something similar in the marble game...

It's again a bargaining game, but this time with a slight preference toward moving over stalling. Does that change much?

Well, this game is similar to the game of Chicken (a.k.a. Hawk-Dove), made famous by a scene in Footloose in which two drivers drive towards annihilation, and the last one to swerve wins. In our bridge-crossing game, there is no option for both players to yield, but that doesn't affect the core mechanism: Chicken is an anti-coordination game, which is also competitive. It has two Nash equilibria, and the winner is the one who most convincingly shows the other that they will not yield.

For example: the driver who removes his steering wheel from the car forces the other to swerve, and wins. Here it would be hard to do so, as removing your possibility of moving forward also removes your possibility of winning if others solve the game. But the more convincing you are that you will not move, the more likely it is that the others will (and vice versa).
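The two Nash equilibria of the bridge standoff can be found by brute force, as long as the impossible cell is skipped. A minimal Python sketch of the stall/overtake matrix above, with S/M for your stall / move ahead and S/O for the other player's stall / overtake, and `None` marking the cell that cannot happen; `pure_nash` is a hypothetical helper.

```python
from itertools import product

# Bridge standoff, copied from the matrix above. Keys: (your move, their move);
# values: (your payoff, their payoff). None marks a cell that cannot happen.
BRIDGE = {
    ("S", "S"): (1, 1), ("S", "O"): (3, 2),
    ("M", "S"): (2, 3), ("M", "O"): None,
}
YOUR_MOVES, THEIR_MOVES = ("S", "M"), ("S", "O")


def pure_nash(game, rows, cols):
    """Feasible cells where neither player gains by switching to another feasible move."""
    equilibria = []
    for r, c in product(rows, cols):
        here = game[(r, c)]
        if here is None:
            continue
        row_alts = [game[(r2, c)] for r2 in rows if game[(r2, c)] is not None]
        col_alts = [game[(r, c2)] for c2 in cols if game[(r, c2)] is not None]
        row_ok = all(here[0] >= alt[0] for alt in row_alts)
        col_ok = all(here[1] >= alt[1] for alt in col_alts)
        if row_ok and col_ok:
            equilibria.append((r, c))
    return equilibria


print(pure_nash(BRIDGE, YOUR_MOVES, THEIR_MOVES))  # [('S', 'O'), ('M', 'S')]
```

Each equilibrium has one player waiting and the other moving, which is the anti-coordination structure of Chicken; which one you land in depends on who blinks first.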

In this game, once again, human biases come into play - appeal to fairness / agreed-upon rules 'you should move, you have the lower number. we agreed earlier that the lower number goes first'. Of course, to a rational agent, if these don't affect the payoff (if you don't get shot for overtaking), they're irrelevant.

A hiccup in your brilliant stalling strategy: the other players realize they have a third option - shoving the staller.

This complicates our payoff matrix a little: when shoving, others incur some cost (risk) to themselves, but a larger cost to you, and so it can act as a punishment. Knowing about this punishment can be a strong enough deterrent that it leaves you with no other reasonable choice than moving ahead.

|  | stall | overtake | shove |
|---|---|---|---|
| stall | 1, 1 | 3, 2 | 1, 3 |
| move ahead | 2, 4 | n/a | n/a |

I never said we couldn't add columns.

This is bad news: the other players will always prefer shoving, which makes moving ahead the only rational decision.

That is, unless you can make shoving you less attractive than overtaking you? Player 101 almost manages to pull this off (turning things back into a bargaining game), but his plan fails due to a spiteful ex-teammate.
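The claim that moving ahead is the only rational decision can be checked by computing the other player's best reply to each of your moves, then picking the move whose reply leaves you best off. This is a minimal sketch under one extra assumption: it treats the standoff as if your choice were visible before theirs, a simplification of the real, messier timing. The cells come from the shove matrix above, with `None` for impossible cells; `best_reply` is a hypothetical helper.

```python
# Bridge standoff with shoving, copied from the matrix above.
# Keys: (your move, their move); values: (your payoff, their payoff); None = impossible.
BRIDGE = {
    ("stall", "stall"): (1, 1),
    ("stall", "overtake"): (3, 2),
    ("stall", "shove"): (1, 3),
    ("move ahead", "stall"): (2, 4),
    ("move ahead", "overtake"): None,
    ("move ahead", "shove"): None,
}
YOUR_MOVES = ("stall", "move ahead")
THEIR_MOVES = ("stall", "overtake", "shove")


def best_reply(game, your_move):
    """The other player's highest-paying feasible answer to `your_move`."""
    feasible = [m for m in THEIR_MOVES if game[(your_move, m)] is not None]
    return max(feasible, key=lambda m: game[(your_move, m)][1])


replies = {move: best_reply(BRIDGE, move) for move in YOUR_MOVES}
your_payoff = {move: BRIDGE[(move, replies[move])][0] for move in YOUR_MOVES}
print(replies)      # {'stall': 'shove', 'move ahead': 'stall'}
print(your_payoff)  # {'stall': 1, 'move ahead': 2}
print(max(YOUR_MOVES, key=your_payoff.get))  # move ahead
```

Lowering the "shove" payoff for the other player below the "overtake" payoff would change the reply to stalling, which is the kind of deterrent Player 101 is trying to set up.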

Would you be able to outrace the timer? Due to the back-and-forth nature of rational strategies in this game and the clock, it seems likely that some of the time, nobody would make it past the bridge.

Consider yourselves lucky, the original plan was to leave each player a spoon after the meal.

Interlude: Three's a Crowd

You somehow made it past 5 games, and even got a nice steak dinner before heading to bed. Now the lights just went off in the dormitory, you have a knife in your pocket.

Should you attack the others? Or just focus on defense?

Let's say that every attacker increases the risk of their target(s) dying by a lot, and of themselves dying by a little. But if no one chooses aggression, all three survive and have to face off in the next game (unknown odds, probably close to 1:3).

As a result, there's not much game happening here: your strategy is dominant either way; it simply depends on what you believe the odds to be. You will attack if you think your odds of coming out unscathed (1:?) are better than your odds of surviving an unknown game (~1:3?). Otherwise, you won't.

This is a good reminder that in decision theory, probabilistic estimates are essential. Every decision you make implies a utility function and some belief about the outcome probabilities, whether you think you have one or not. If you don't explicitly decide what your utility is and what you believe is likely, your choices will oscillate, like two people trying to steer one car: the result will be worse than either person's steering alone. (Switching randomly between two utility function / probabilistic model pairs, you will get worse outcomes for either than by picking one and sticking to it.)

Hey, that's a silver lining right there: you can sleep easy knowing that you won't have to draw a normal-form matrix, for once.

Note: from the perspective of the organisers, this makes little sense. The risk that all participants will die from knife wounds is very high. If that happens, you lose the grand finale opportunity. Except of course if the organisers have a keen sense for drama, and can somehow predict that the players will only attack the weakest element (the injured player). Or maybe at this point they can clearly see the plot armor that some characters must be wearing.

Sixth Game: Squid Game

If you've made it this far, you might think that things are looking good. Since you both have a knife, any physical game is pretty much a one-on-one knife fight for victory.

This is not as great as it seems: knife fights have three outcomes: 1) they die, you live, 2) they live, you die, 3) both die. If you're physically evenly matched, that's a 1:3 (or worse) chance of survival. Without knives, outcomes 1 and 2 stay similar, but 3 is eliminated (or close to eliminated), which raises both players' chances of survival.

My best friend is a dentist, and sometimes he gets really intense about flossing

This fact creates the opportunity for a quick coordination game before the actual contest: if both players can coordinate getting rid of the knife, they both increase their chances of survival.

Does betraying the other during the knife-discarding process give you an advantage? If so, the payoff matrix looks like this:

|  | betray | comply |
|---|---|---|
| betray | 2, 2 | 4, 1 |
| comply | 1, 4 | 3, 3 |

You might recognize the Prisoner's dilemma, which is probably the most famous normal-form matrix in all of game theory. It's a symmetric, competitive game with a nasty Nash equilibrium. If the payoffs can't be altered somehow, rational agents will always betray. Even when real people play, prisoner's dilemmas often end in mutual defection, and both players lose. And that's even though there's a better outcome for everyone! It's famous because it's so counter-intuitive. The best move is the worst move.

But don't despair: in our korean arena, things are not set in stone. If the players find a way to make betrayal difficult or useless, they can change the payoff matrix to favor cooperation more.

|  | betray | comply |
|---|---|---|
| betray | 3, 3 | 2, 1 |
| comply | 1, 2 | 4, 4 |

The actual payoffs might not be symmetric, depending for example on whether there is an imbalance between offence and defence.

How? For example, if both players put their knives on the floor simultaneously, then walk away from the knives one step at a time in sync until they are both in the squid (at that point, you are locked in the squid since the game starts), they can end up in this nicer payoff structure. Of course if one player runs for the knife, the other will too and both will be back to square 1.

But, like the night-protection game, this game is about coordination, and people can usually solve these: as long as we can make sure we move in sync, we can avoid the bad outcomes and skip to the good one. In game theory, this normal-form is called the Assurance game (or Stag Hunt).
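Changing the payoffs changes the equilibria, and a brute-force search makes that visible. A minimal Python sketch comparing the two knife-discarding matrices above (B/C for betray/comply); `pure_nash` is a hypothetical helper that flags any cell where neither player gains by switching alone.

```python
from itertools import product

MOVES = ("B", "C")  # B = betray, C = comply

# The two knife-discarding matrices above. Keys: (your move, their move);
# values: (your payoff, their payoff).
PRISONERS_DILEMMA = {
    ("B", "B"): (2, 2), ("B", "C"): (4, 1),
    ("C", "B"): (1, 4), ("C", "C"): (3, 3),
}
STAG_HUNT = {
    ("B", "B"): (3, 3), ("B", "C"): (2, 1),
    ("C", "B"): (1, 2), ("C", "C"): (4, 4),
}


def pure_nash(game):
    """Cells where neither player gains by switching their own move alone."""
    return [
        (r, c) for r, c in product(MOVES, MOVES)
        if all(game[(r, c)][0] >= game[(r2, c)][0] for r2 in MOVES)
        and all(game[(r, c)][1] >= game[(r, c2)][1] for c2 in MOVES)
    ]


print(pure_nash(PRISONERS_DILEMMA))  # [('B', 'B')]
print(pure_nash(STAG_HUNT))          # [('B', 'B'), ('C', 'C')]
```

In the Prisoner's dilemma, mutual betrayal is the only equilibrium; once the synchronised walk-away reshapes the payoffs, mutual compliance becomes a second equilibrium, and both players prefer it.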

Anyways, the players do not do this. They decide to have a dramatic knife-fight instead, in the rain. Could this hint towards a maximizing-drama term in their utility function? The main character ends up gaining the upper hand.

This is where one of the show's rare big redeeming moments happens: the main character has all but won, and suddenly realizes his friend's death is not inevitable; it can be avoided if he forsakes the money. This is a true heroic moment, where the hero decides (possibly a little late) that a human life has no price. If, like me, this twist surprised you, you'll probably find that it serves as a good reminder: there are often solutions outside of the box. Like in the bridge-crossing, players can change the entire dynamic by coming up with a completely new course of action. The same can be true in life: we often restrict ourselves to the choices we are used to, without realizing that an entirely different path is available to us. This is why I feel inspired whenever I see someone breaking their mind's restrictions in a way I did not expect.

Disclaimer:

Of course, all of this analytical theory is just that: theory. Real life can be fast and messy, and doesn't fit on a piece of paper. Also, I don't actually think you should become obsessed with game theory and write payoff matrices for everything you do.

Still, concepts like the ones discussed here are neat, and can give us new ways of looking at old problems. And sometimes, a new angle can be just the thing to help you turn the tide.

Most importantly, I hope you find these ideas fun to play with. And if they made you want to dig deeper into this fascinating field, here are a few links I picked willy-nilly:

[1] Repeated games: the Pavlov strategy

(Images credit: Netflix)